Entropy Measures¶

Entropy originates in physics, where it captures the amount of thermal energy per unit temperature that is unavailable for doing useful work in an energy system. Applied to information sciences, entropy measures how much information is contained in a message, such as an encoded time series. Because markets are not perfect, prices are formed with partial information. As a result, entropy measures are helpful in determining how much useful information a price signal contains.

This module concerns itself with a variety of entropy measures that are helpful in determining the amount of information contained in a price signal - namely:

Shannon Entropy

Plug-in (or Maximum Likelihood) Entropy

Lempel-Ziv Entropy

Kontoyiannis Entropy

Note

Underlying Literature

The following sources elaborate extensively on the topic:

Advances in Financial Machine Learning, Chapter 18, by Marcos Lopez de Prado. Describes the utility and motivation behind entropy measures in finance in more detail.

Note

Range of Entropy Values

Users may wonder why some of the entropy functions are yielding values greater than 1. A comprehensive explanation of why this is occurring can be found in the following StackExchange thread:

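The short explanation is that we calculate our entropy measures using logarithms of base 2, so entropy is expressed in bits and its maximum, \(\log_2\) of the number of distinct symbols, is greater than 1 whenever a message uses more than two distinct symbols.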
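To see how a base-2 entropy can exceed 1, consider a hypothetical source (an illustrative assumption, not data from this module) that emits four symbols, each with probability \(\frac{1}{4}\):

\[H = -\sum_{k=1}^{4} \frac{1}{4} \log_{2}\left[\frac{1}{4}\right] = \log_{2}[4] = 2 \text{ bits}\]

A binary source with two equally likely symbols, by contrast, tops out at \(\log_{2}[2] = 1\) bit.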

The Shannon Entropy¶

Claude Shannon is credited with having one of the first conceptualizations of entropy, which he defined as the average amount of information produced by a stationary source of data. More formally, entropy is the smallest number of bits per character required to describe a message in a uniquely decodable way. Mathematically, the Shannon entropy of a discrete random variable \(X\) with possible values \(x \in A\) is defined as:

\[H[X] = -\sum_{x \in A} p[x] \log_{2} p[x]\]

where \(p[x]\) is the probability of \(x\) occurring.
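As a minimal sketch of the definition above, the following estimates \(p[x]\) by the relative frequency of each character in a message and sums the terms in base 2. The function name `shannon_entropy` is a hypothetical helper chosen for illustration and is not taken from this module's API.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Shannon entropy, in bits per character, of the empirical
    character distribution of `message` (hypothetical helper)."""
    if not message:
        raise ValueError("message must not be empty")
    n = len(message)
    # p[x] is approximated by the relative frequency of each character.
    counts = Counter(message)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


if __name__ == "__main__":
    print(shannon_entropy("0101"))  # two equally likely symbols
    print(shannon_entropy("abcd"))  # four equally likely symbols
```

With two equally likely symbols the estimate is 1 bit, and with four it is 2 bits, which illustrates why values above 1 are possible.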

Plug-in (or Maximum Likelihood) Entropy¶

Gao et al. (2008) built on the work done by Shannon by conceptualizing the Plug-in measure of entropy, also known as the maximum likelihood estimator of entropy:

Given a data sequence \(x_{1}^{n}\), comprising the string of values starting in position 1 and ending in position \(n\), we can form a dictionary of all words of length \(w < n\) in that sequence: \(A^w\). Consider an arbitrary word \(y_{1}^w \in A^w\) of length \(w\). We denote by \(\hat{p}_{w}\left[y_{1}^{w}\right]\) the empirical probability of the word \(y_{1}^w\) in \(x_{1}^n\), which means that \(\hat{p}_{w}\left[y_{1}^{w}\right]\) is the frequency with which \(y_{1}^w\) appears in \(x_{1}^n\). Assuming that the data is generated by a stationary and ergodic process, the law of large numbers guarantees that, for a fixed \(w\) and a large \(n\), the empirical distribution \(\hat{p}_{w}\) will be close to the true distribution \({p}_{w}\). Under these circumstances, a natural estimator for the entropy rate (i.e. average entropy per bit) is:

\[\hat{H}_{n, w} = -\frac{1}{w} \sum_{y_{1}^{w} \in A^{w}} \hat{p}_{w}\left[y_{1}^{w}\right] \log_{2} \hat{p}_{w}\left[y_{1}^{w}\right]\]

Since the empirical distribution is also the maximum likelihood estimate of the true distribution, this is also often referred to as the maximum likelihood entropy estimator. The value \(w\) should be large enough for \(\hat{H}_{n, w}\) to be acceptably close to the true entropy \(H\). The value of \(n\) needs to be much larger than \(w\), so that the empirical distribution of order \(w\) is close to the true distribution.
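The plug-in estimator described above can be sketched as follows. This is an illustration under stated assumptions, not this module's implementation: the name `plug_in_entropy` and its `word_length` parameter are hypothetical, and words are counted over overlapping windows, which is one possible reading of "all words of length \(w\)".

```python
import math
from collections import Counter


def plug_in_entropy(message: str, word_length: int = 1) -> float:
    """Plug-in (maximum likelihood) entropy rate estimate in bits per
    character, using words of `word_length` characters (hypothetical helper)."""
    if not 1 <= word_length < len(message):
        raise ValueError("word_length must satisfy 1 <= word_length < len(message)")
    # Assumption: words are taken from overlapping sliding windows.
    words = [message[i:i + word_length]
             for i in range(len(message) - word_length + 1)]
    total = len(words)
    counts = Counter(words)
    # Entropy of the empirical word distribution ...
    word_entropy = -sum((c / total) * math.log2(c / total)
                        for c in counts.values())
    # ... normalised by the word length to obtain a per-character rate.
    return word_entropy / word_length


if __name__ == "__main__":
    print(plug_in_entropy("01010101", word_length=1))
    print(plug_in_entropy("01010101", word_length=2))
```

For the alternating message the estimate falls once `word_length` is raised from 1 to 2, because words of length 2 expose the repeating pattern that single characters cannot.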

The Lempel-Ziv Entropy¶

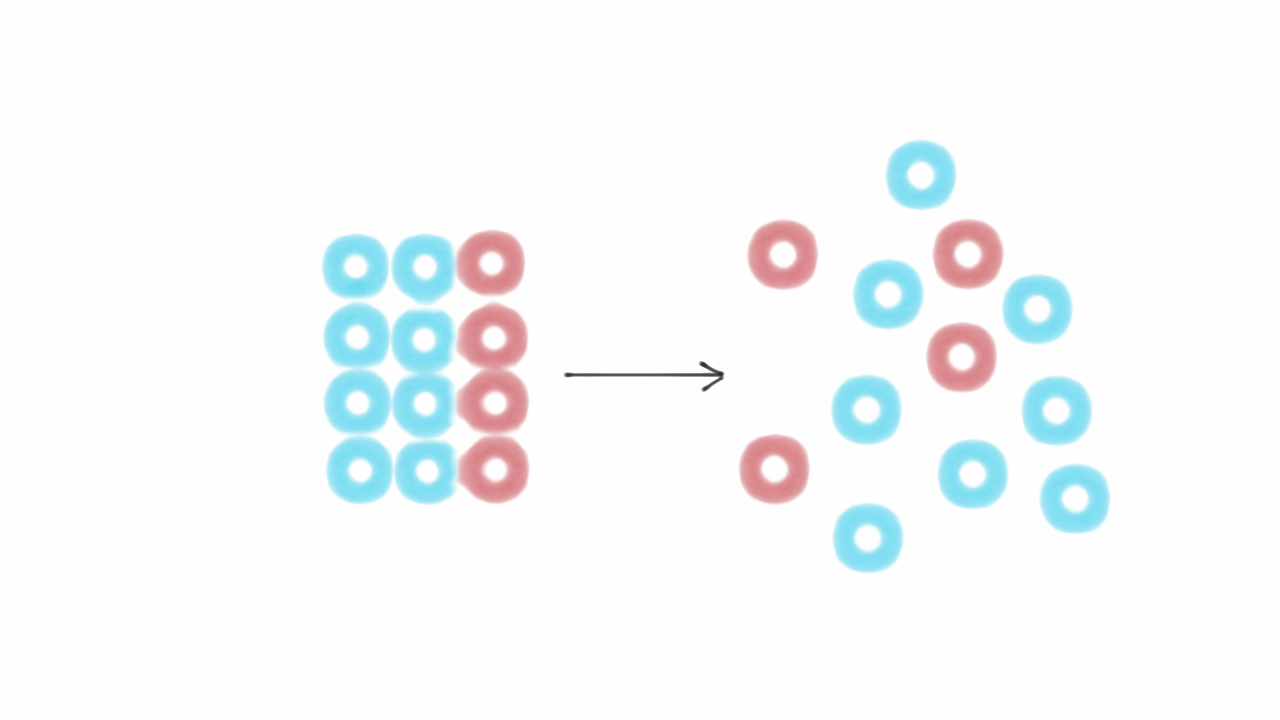

Building on Shannon's notion of entropy, Abraham Lempel and Jacob Ziv proposed that entropy be treated as a measure of complexity. Intuitively, a complex sequence contains more information than a regular (predictable) sequence. Based on this idea, the Lempel-Ziv (LZ) algorithm decomposes a message into a number of non-redundant substrings. LZ entropy builds on this idea by dividing the number of non-redundant substrings by the length of the original message. The intuition here is that complex messages have high entropy and therefore require large dictionaries of substrings relative to the length of the original message.
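The decomposition described above can be sketched as a single pass that repeatedly extends the current substring until it is no longer in the dictionary. This is a minimal illustration, not necessarily the parsing rule used by this module; `lempel_ziv_entropy` is a hypothetical name.

```python
def lempel_ziv_entropy(message: str) -> float:
    """Number of non-redundant substrings found by a simple LZ-style
    parse, divided by the message length (hypothetical helper)."""
    if not message:
        raise ValueError("message must not be empty")
    n = len(message)
    library = set()  # dictionary of non-redundant substrings
    start = 0
    while start < n:
        end = start + 1
        # Grow the candidate until it is new or the message is exhausted.
        while end < n and message[start:end] in library:
            end += 1
        library.add(message[start:end])
        start = end
    return len(library) / n


if __name__ == "__main__":
    print(lempel_ziv_entropy("aaaa"))      # dictionary: {"a", "aa"}
    print(lempel_ziv_entropy("abcd"))      # every character is new
    print(lempel_ziv_entropy("01010101"))  # dictionary: {"0", "1", "01", "010"}
```

A message with no repetition needs one dictionary entry per character, while repetitive messages are covered by a few progressively longer entries, so the ratio falls.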

For a detailed explanation of the Lempel-Ziv entropy algorithm, please consult the following resources:

The Kontoyiannis Entropy¶

Kontoyiannis attempts to make a more efficient use of the information available in a message by taking advantage of a technique known as length matching.

Let us define \(L_{i}^n\) as 1 plus the length of the longest match found in the \(n\) bits prior to \(i\):

\[L_{i}^{n} = 1 + \max \left\{l \mid x_{i}^{i+l} = x_{j}^{j+l} \text{ for some } i-n \leq j \leq i-1,\ l \in [0, n]\right\}\]

In 1993, Ornstein and Weiss formally established that:

\[\lim_{n \rightarrow \infty} \frac{L_{i}^{n}}{\log_{2}[n]} = \frac{1}{H}\]

where \(H\) is the measure of entropy. Kontoyiannis uses this result to estimate Shannon’s entropy rate by calculating the average \(\frac{L_{i}^{n}}{\log _{2}[n]}\) and using the reciprocal to estimate \(H\). The general intuition is that, as we increase the available history, we expect messages with high entropy to produce relatively shorter non-redundant substrings. In contrast, messages with low entropy will produce relatively longer non-redundant substrings as the message is parsed.
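The length-matching idea can be sketched with a fixed look-back window. This is a simplified illustration under stated assumptions, not this module's implementation: `match_length` and `kontoyiannis_entropy` are hypothetical names, the window size is fixed rather than expanding, and the estimate is the reciprocal of the average \(\frac{L_{i}^{n}}{\log_{2}[n]}\) as described above.

```python
import math


def match_length(message: str, i: int, n: int) -> int:
    """L_i^n: 1 plus the length of the longest substring starting at
    position i that also starts somewhere in the n positions before i."""
    longest = 0
    for length in range(1, n + 1):
        target = message[i:i + length]
        if len(target) < length:
            break  # ran past the end of the message
        window_starts = range(max(0, i - n), i)
        if any(message[j:j + length] == target for j in window_starts):
            longest = length
        else:
            break  # no match of this length implies none of a longer one
    return longest + 1


def kontoyiannis_entropy(message: str, window: int) -> float:
    """Reciprocal of the average L_i^n / log2(n) over all positions with
    a full window of history (fixed-window simplification)."""
    if window < 2 or len(message) <= window:
        raise ValueError("need window >= 2 and len(message) > window")
    ratios = [match_length(message, i, window) / math.log2(window)
              for i in range(window, len(message))]
    return 1.0 / (sum(ratios) / len(ratios))


if __name__ == "__main__":
    print(kontoyiannis_entropy("01" * 16, window=4))         # repetitive
    print(kontoyiannis_entropy("abcdefghijklmnop", window=4))  # no repeats
```

The repetitive message yields long matches and therefore a lower estimate than the message with no repeated characters, in line with the intuition stated above.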

Research Notebook¶

The following research notebook can be used to better understand the entropy measures covered in this module